1.1. Acquiring SUNDIALS

There are two supported ways for building and installing SUNDIALS from source. One option is to use the Spack HPC package manager:

spack install sundials

The second supported option for building and installing SUNDIALS is with CMake.

Before proceeding with CMake, the source code must be downloaded. This can be done

by cloning the SUNDIALS GitHub repository

(run git clone https://github.com/LLNL/sundials), or by downloading the

SUNDIALS release compressed archives (.tar.gz) from the SUNDIALS

website.

The compressed archives allow for downloading of individual SUNDIALS packages.

The name of the distribution archive is of the form

SOLVER-X.Y.Z.tar.gz, where SOLVER is one of: sundials, cvode,

cvodes, arkode, ida, idas, or kinsol, and X.Y.Z

represents the version number (of the SUNDIALS suite or of the individual

solver). After downloading the relevant archives, uncompress and expand the sources,

by running

% tar -zxf SOLVER-X.Y.Z.tar.gz

This will extract source files under a directory SOLVER-X.Y.Z.
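As a minimal sketch of the naming convention above, the following derives the archive name and the resulting source directory from a package name and a version. The value X.Y.Z is a placeholder for the release actually downloaded, and the shell variable names are illustrative rather than anything defined by SUNDIALS.

```shell
# Illustrative variables (not defined by SUNDIALS).
SOLVER=cvode      # one of: sundials, cvode, cvodes, arkode, ida, idas, kinsol
VERSION=X.Y.Z     # placeholder: substitute the version you downloaded

ARCHIVE="$SOLVER-$VERSION.tar.gz"   # name of the distribution archive
SOLVERDIR="$SOLVER-$VERSION"        # directory created by the extraction

# Extract only if the archive is present in the current directory.
if [ -f "$ARCHIVE" ]; then
  tar -zxf "$ARCHIVE"
  echo "sources extracted under $SOLVERDIR"
else
  echo "$ARCHIVE not found; download it first" >&2
fi
```

The resulting directory is the one referred to as SOLVERDIR in the rest of this chapter.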

Starting with version 2.6.0 of SUNDIALS, CMake is the only supported method of installation. The explanations of the installation procedure begin with a few common observations:

The remainder of this chapter will follow these conventions:

- SOLVERDIR is the directory SOLVER-X.Y.Z created above; i.e. the directory containing the SUNDIALS sources.

- BUILDDIR is the (temporary) directory under which SUNDIALS is built.

- INSTDIR is the directory under which the SUNDIALS exported header files and libraries will be installed. Typically, header files are exported under a directory INSTDIR/include while libraries are installed under INSTDIR/lib, with INSTDIR specified at configuration time.

For SUNDIALS’ CMake-based installation, in-source builds are prohibited; in other words, the build directory BUILDDIR can not be the same as SOLVERDIR and such an attempt will lead to an error. This prevents “polluting” the source tree and allows efficient builds for different configurations and/or options.

The installation directory INSTDIR can not be the same as the source directory SOLVERDIR.

By default, only the libraries and header files are exported to the installation directory INSTDIR. If enabled by the user (with the appropriate toggle for CMake), the examples distributed with SUNDIALS will be built together with the solver libraries but the installation step will result in exporting (by default in a subdirectory of the installation directory) the example sources and sample outputs together with automatically generated configuration files that reference the installed SUNDIALS headers and libraries. As such, these configuration files for the SUNDIALS examples can be used as “templates” for your own problems. CMake installs CMakeLists.txt files and also (as an option available only under Unix/Linux) Makefile files. Note this installation approach also allows the option of building the SUNDIALS examples without having to install them. (This can be used as a sanity check for the freshly built libraries.)

Further details on the CMake-based installation procedures, instructions for manual compilation, and a roadmap of the resulting installed libraries and exported header files, are provided in §1.2 and §1.2.8.

1.2. Building and Installing with CMake

CMake-based installation provides a platform-independent build system. CMake can generate Unix and Linux Makefiles, as well as KDevelop, Visual Studio, and (Apple) Xcode project files from the same configuration file. In addition, CMake provides a GUI front end which allows an interactive build and installation process.

The SUNDIALS build process requires CMake version 3.12.0 or higher and a working

C compiler. On Unix-like operating systems, it also requires Make (and

curses, including its development libraries, for the GUI front end to CMake,

ccmake or cmake-gui), while on Windows it requires Visual Studio. While

many Linux distributions offer CMake, the version included may be out of date.

CMake adds new features regularly, and you should download the

latest version from http://www.cmake.org. Build instructions for CMake (only

necessary for Unix-like systems) can be found on the CMake website. Once CMake

is installed, Linux/Unix users will be able to use ccmake or cmake-gui

(depending on the version of CMake), while Windows users will be able to use

CMakeSetup.

As previously noted, when using CMake to configure, build and install SUNDIALS,

it is always required to use a separate build directory. While CMake itself permits

in-source builds, they are explicitly prohibited by the SUNDIALS CMake scripts (one

of the reasons being that, unlike autotools, CMake does not provide a make distclean

procedure and it is therefore difficult to clean up the source tree

after an in-source build). With a separate build directory, the user can

remove all traces of the build by simply deleting the

build directory. CMake does generate a make clean target, which removes files

generated by the compiler and linker.
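The directory rules described so far (the build and installation directories must both differ from the source directory) can be checked before configuring. The following is only a sketch: the helper name layout_ok and the paths are hypothetical, and the check is a plain string comparison that does not resolve symbolic links or trailing slashes.

```shell
# Hypothetical layout; the names follow the conventions of this chapter.
SOLVERDIR="$PWD/sundials-X.Y.Z"   # placeholder source directory
BUILDDIR="$PWD/builddir"
INSTDIR="$PWD/instdir"

# Succeeds only if neither the build directory ($2) nor the install
# directory ($3) is the same string as the source directory ($1).
layout_ok() {
  [ "$2" != "$1" ] && [ "$3" != "$1" ]
}

if layout_ok "$SOLVERDIR" "$BUILDDIR" "$INSTDIR"; then
  echo "layout ok: configure from $BUILDDIR"
else
  echo "BUILDDIR and INSTDIR must differ from SOLVERDIR" >&2
fi
```

The SUNDIALS CMake scripts perform their own check for in-source builds; a guard like this merely reports the problem earlier in a wrapper script.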

1.2.1. Configuring, building, and installing on Unix-like systems

The default CMake configuration will build all included solvers and associated

examples and will build static and shared libraries. The INSTDIR defaults to

/usr/local and can be changed by setting the CMAKE_INSTALL_PREFIX

variable. Support for FORTRAN and all other options are disabled.

CMake can be used from the command line with the cmake command, or from a

curses-based GUI by using the ccmake command, or from a wxWidgets or

QT based GUI by using the cmake-gui command. Examples for using both text

and graphical methods will be presented. For the examples shown it is assumed

that there is a top level SUNDIALS directory with appropriate source, build and

install directories:

$ mkdir (...)/INSTDIR

$ mkdir (...)/BUILDDIR

$ cd (...)/BUILDDIR

1.2.1.1. Building with the GUI

Using CMake with the ccmake GUI follows the general process:

- Select and modify values, run configure (c key)

- New values are denoted with an asterisk

- To set a variable, move the cursor to the variable and press enter

  - If it is a boolean (ON/OFF) it will toggle the value

  - If it is string or file, it will allow editing of the string

  - For file and directories, the <tab> key can be used to complete

- Repeat until all values are set as desired and the generate option is available (g key)

- Some variables (advanced variables) are not visible right away; to see advanced variables, toggle to advanced mode (t key)

- To search for a variable press the / key, and to repeat the search, press the n key

Using CMake with the cmake-gui GUI follows a similar process:

- Select and modify values, click Configure

- The first time you click Configure, make sure to pick the appropriate generator (the following will assume generation of Unix Makefiles).

- New values are highlighted in red

- To set a variable, click on or move the cursor to the variable and press enter

  - If it is a boolean (ON/OFF) it will check/uncheck the box

  - If it is string or file, it will allow editing of the string. Additionally, an ellipsis button (...) will appear on the far right of the entry. Clicking this button will bring up the file or directory selection dialog.

  - For files and directories, the <tab> key can be used to complete

- Repeat until all values are set as desired and click the Generate button

- Some variables (advanced variables) are not visible right away; to see advanced variables, click the advanced button

To build the default configuration using the curses GUI, from the BUILDDIR

enter the ccmake command and point to the SOLVERDIR:

$ ccmake (...)/SOLVERDIR

Similarly, to build the default configuration using the wxWidgets GUI, from the

BUILDDIR enter the cmake-gui command and point to the SOLVERDIR:

$ cmake-gui (...)/SOLVERDIR

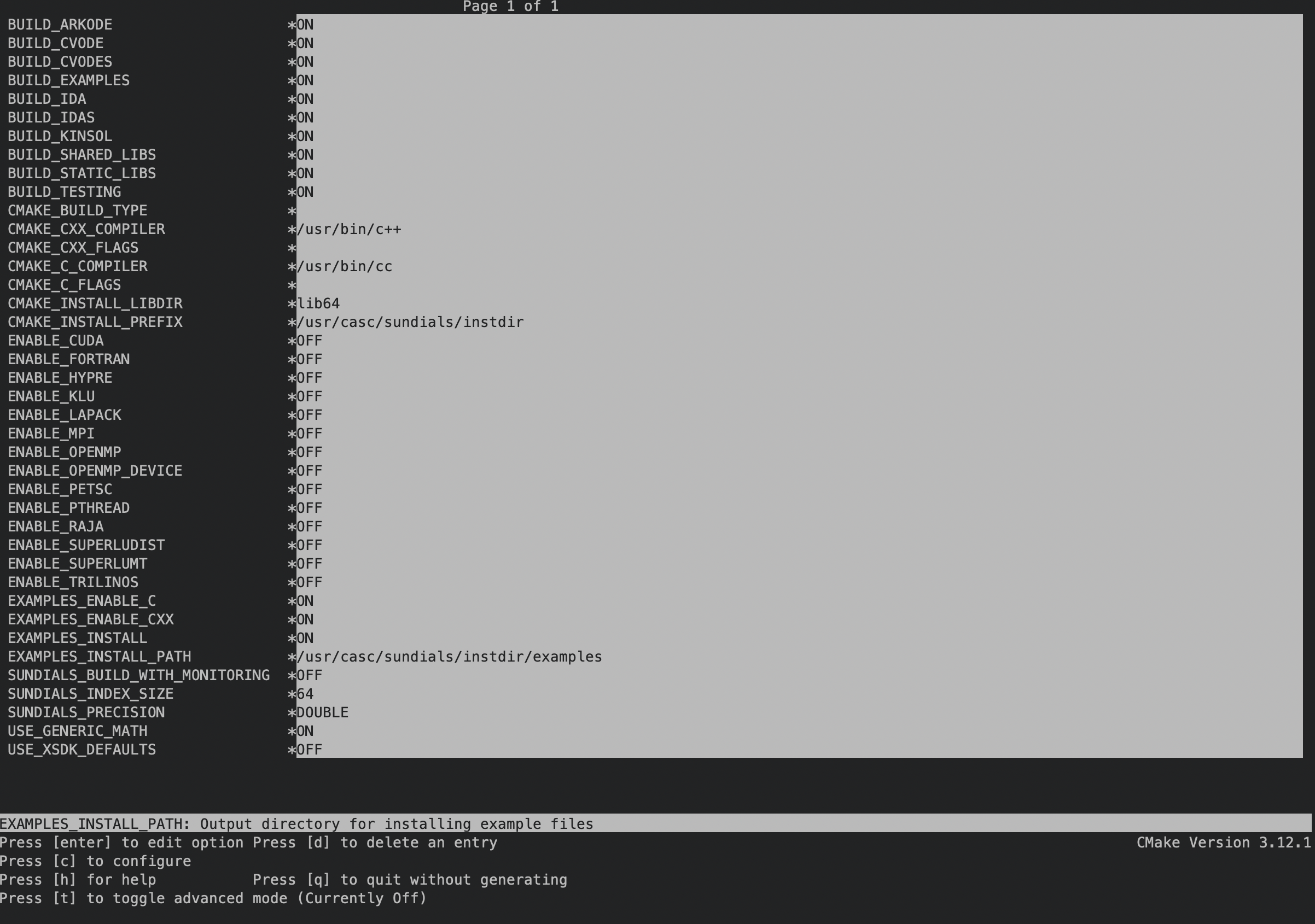

The default curses configuration screen is shown in the following figure.

Fig. 1.2 Default configuration screen. Note: Initial screen is empty. To get this default configuration, press ‘c’ repeatedly (accepting default values denoted with asterisk) until the ‘g’ option is available.

The default INSTDIR for both SUNDIALS and the corresponding examples can be changed

by setting the CMAKE_INSTALL_PREFIX and the EXAMPLES_INSTALL_PATH as

shown in the following figure.

Fig. 1.3 Changing the INSTDIR for SUNDIALS and corresponding EXAMPLES.

Pressing the g key or clicking generate will generate Makefiles

including all dependencies and all rules to build SUNDIALS on this system. Back

at the command prompt, you can now run:

$ make

or for a faster parallel build (e.g. using 4 parallel jobs), you can run

$ make -j 4

To install SUNDIALS in the installation directory specified in the configuration, simply run:

$ make install

1.2.1.2. Building from the command line

Using CMake from the command line is simply a matter of specifying CMake

variable settings with the cmake command. The following will build the

default configuration:

$ cmake -DCMAKE_INSTALL_PREFIX=/home/myname/sundials/instdir \

> -DEXAMPLES_INSTALL_PATH=/home/myname/sundials/instdir/examples \

> ../srcdir

$ make

$ make install

1.2.2. Configuration options (Unix/Linux)

A complete list of all available options for a CMake-based SUNDIALS configuration is provided below. Note that the default values shown are for a typical configuration on a Linux system and are provided as illustration only.

- BUILD_ARKODE

Build the ARKODE library

Default:

ON

- BUILD_CVODE

Build the CVODE library

Default:

ON

- BUILD_CVODES

Build the CVODES library

Default:

ON

- BUILD_IDA

Build the IDA library

Default:

ON

- BUILD_IDAS

Build the IDAS library

Default:

ON

- BUILD_KINSOL

Build the KINSOL library

Default:

ON

- BUILD_SHARED_LIBS

Build shared libraries

Default:

ON

- BUILD_STATIC_LIBS

Build static libraries

Default:

ON

- CMAKE_BUILD_TYPE

Choose the type of build, options are:

Debug, Release, RelWithDebInfo, and MinSizeRel

Default:

RelWithDebInfo

Note

Specifying a build type will trigger the corresponding build type specific compiler flag options below which will be appended to the flags set by CMAKE_<language>_FLAGS.

- CMAKE_C_COMPILER

C compiler

Default:

/usr/bin/cc

- CMAKE_C_FLAGS

Flags for C compiler

Default:

- CMAKE_C_FLAGS_DEBUG

Flags used by the C compiler during debug builds

Default:

-g

- CMAKE_C_FLAGS_MINSIZEREL

Flags used by the C compiler during release minsize builds

Default:

-Os -DNDEBUG

- CMAKE_C_FLAGS_RELEASE

Flags used by the C compiler during release builds

Default:

-O3 -DNDEBUG

- CMAKE_C_STANDARD

The C standard to build C parts of SUNDIALS with.

Default: 99

Options: 99, 11, 17.

- CMAKE_C_EXTENSIONS

Enable compiler specific C extensions.

Default:

OFF

- CMAKE_CXX_COMPILER

C++ compiler

Default:

/usr/bin/c++

Note

A C++ compiler is only required when a feature requiring C++ is enabled (e.g., CUDA, HIP, SYCL, RAJA, etc.) or the C++ examples are enabled.

All SUNDIALS solvers can be used from C++ applications without setting any additional configuration options.

- CMAKE_CXX_FLAGS

Flags for C++ compiler

Default:

- CMAKE_CXX_FLAGS_DEBUG

Flags used by the C++ compiler during debug builds

Default:

-g

- CMAKE_CXX_FLAGS_MINSIZEREL

Flags used by the C++ compiler during release minsize builds

Default:

-Os -DNDEBUG

- CMAKE_CXX_FLAGS_RELEASE

Flags used by the C++ compiler during release builds

Default:

-O3 -DNDEBUG

- CMAKE_CXX_STANDARD

The C++ standard to build C++ parts of SUNDIALS with.

Default: 11

Options: 98, 11, 14, 17, 20.

- CMAKE_CXX_EXTENSIONS

Enable compiler specific C++ extensions.

Default:

OFF

- CMAKE_Fortran_COMPILER

Fortran compiler

Default:

/usr/bin/gfortran

Note

Fortran support (and all related options) are triggered only if either Fortran-C support (BUILD_FORTRAN_MODULE_INTERFACE) or LAPACK (ENABLE_LAPACK) support is enabled.

- CMAKE_Fortran_FLAGS

Flags for Fortran compiler

Default:

- CMAKE_Fortran_FLAGS_DEBUG

Flags used by the Fortran compiler during debug builds

Default:

-g

- CMAKE_Fortran_FLAGS_MINSIZEREL

Flags used by the Fortran compiler during release minsize builds

Default:

-Os

- CMAKE_Fortran_FLAGS_RELEASE

Flags used by the Fortran compiler during release builds

Default:

-O3

- CMAKE_INSTALL_LIBDIR

The directory under which libraries will be installed.

Default: Set based on the system:

lib, lib64, or lib/<multiarch-tuple>

- CMAKE_INSTALL_PREFIX

Install path prefix, prepended onto install directories

Default:

/usr/local

Note

The user must have write access to the location specified through this option. Exported SUNDIALS header files and libraries will be installed under subdirectories include and lib of CMAKE_INSTALL_PREFIX, respectively.

- ENABLE_CUDA

Build the SUNDIALS CUDA modules.

Default:

OFF

- CMAKE_CUDA_ARCHITECTURES

Specifies the CUDA architecture to compile for.

Default:

sm_30

- EXAMPLES_ENABLE_C

Build the SUNDIALS C examples

Default:

ON

- EXAMPLES_ENABLE_CXX

Build the SUNDIALS C++ examples

Default:

OFF

- EXAMPLES_ENABLE_CUDA

Build the SUNDIALS CUDA examples

Default:

OFF

Note

You need to enable CUDA support to build these examples.

- EXAMPLES_ENABLE_F2003

Build the SUNDIALS Fortran2003 examples

Default:

ON (if BUILD_FORTRAN_MODULE_INTERFACE is ON)

- EXAMPLES_INSTALL

Install example files

Default:

ON

Note

This option is triggered when any of the SUNDIALS example programs are enabled (EXAMPLES_ENABLE_<language> is ON). If the user requires installation of example programs then the sources and sample output files for all SUNDIALS modules that are currently enabled will be exported to the directory specified by EXAMPLES_INSTALL_PATH. A CMake configuration script will also be automatically generated and exported to the same directory. Additionally, if the configuration is done under a Unix-like system, makefiles for the compilation of the example programs (using the installed SUNDIALS libraries) will be automatically generated and exported to the directory specified by EXAMPLES_INSTALL_PATH.

- EXAMPLES_INSTALL_PATH

Output directory for installing example files

Default:

/usr/local/examples

Note

The actual default value for this option will be an examples subdirectory created under CMAKE_INSTALL_PREFIX.

- BUILD_FORTRAN_MODULE_INTERFACE

Enable Fortran 2003 interface

Default:

OFF

- SUNDIALS_LOGGING_LEVEL

Set the maximum logging level for the SUNLogger runtime API. The higher this is set, the more output that may be logged, and the more performance may degrade. The options are:

0 – no logging

1 – log errors

2 – log errors + warnings

3 – log errors + warnings + informational output

4 – log errors + warnings + informational output + debug output

5 – log all of the above and even more (e.g. vector valued variables may be logged)

Default: 2

- SUNDIALS_BUILD_WITH_MONITORING

Build SUNDIALS with capabilities for fine-grained monitoring of solver progress and statistics. This is primarily useful for debugging.

Default: OFF

Warning

Building with monitoring may result in minor performance degradation even if monitoring is not utilized.

- SUNDIALS_BUILD_WITH_PROFILING

Build SUNDIALS with capabilities for fine-grained profiling. This requires POSIX timers or the Windows profileapi.h timers.

Default: OFF

Warning

Profiling will impact performance, and should be enabled judiciously.

- SUNDIALS_ENABLE_ERROR_CHECKS

Build SUNDIALS with more extensive checks for unrecoverable errors.

Default:

OFF when CMAKE_BUILD_TYPE=Release|RelWithDebInfo and ON otherwise.

Warning

Error checks will impact performance, but can be helpful for debugging.

- SUNDIALS_ENABLE_EXTERNAL_ADDONS

Build SUNDIALS with any external addons that you have put in sundials/external.

Default:

OFF

Warning

Addons are not maintained by the SUNDIALS team. Use at your own risk.

- ENABLE_GINKGO

Enable interfaces to the Ginkgo linear algebra library.

Default:

OFF

- Ginkgo_DIR

Path to the Ginkgo installation.

Default: None

- SUNDIALS_GINKGO_BACKENDS

Semi-colon separated list of Ginkgo target architectures/executors to build for. Options currently supported are REF (the Ginkgo reference executor), OMP, CUDA, HIP, and DPC++.

Default: “REF;OMP”

- ENABLE_KOKKOS

Enable the Kokkos based vector.

Default:

OFF

- Kokkos_DIR

Path to the Kokkos installation.

Default: None

- ENABLE_KOKKOS_KERNELS

Enable the Kokkos based dense matrix and linear solver.

Default:

OFF

- KokkosKernels_DIR

Path to the Kokkos-Kernels installation.

Default: None

- ENABLE_HIP

Enable HIP Support

Default:

OFF

- AMDGPU_TARGETS

Specify which AMDGPU processor(s) to target.

Default: None

- ENABLE_HYPRE

Flag to enable hypre support

Default:

OFF

Note

See additional information on building with hypre enabled in §1.2.4.

- HYPRE_INCLUDE_DIR

Path to hypre header files

Default: none

- HYPRE_LIBRARY

Path to hypre installed library files

Default: none

- ENABLE_KLU

Enable KLU support

Default:

OFF

Note

See additional information on building with KLU enabled in §1.2.4.

- KLU_INCLUDE_DIR

Path to SuiteSparse header files

Default: none

- KLU_LIBRARY_DIR

Path to SuiteSparse installed library files

Default: none

- ENABLE_LAPACK

Enable LAPACK support

Default:

OFF

Note

Setting this option to ON will trigger additional CMake options. See additional information on building with LAPACK enabled in §1.2.4.

- LAPACK_LIBRARIES

LAPACK (and BLAS) libraries

Default:

/usr/lib/liblapack.so;/usr/lib/libblas.so

Note

CMake will search for libraries in your LD_LIBRARY_PATH prior to searching default system paths.

- ENABLE_MAGMA

Enable MAGMA support.

Default:

OFF

Note

Setting this option to ON will trigger additional options related to MAGMA.

- MAGMA_DIR

Path to the root of a MAGMA installation.

Default: none

- SUNDIALS_MAGMA_BACKENDS

Which MAGMA backend to use under the SUNDIALS MAGMA interface.

Default:

CUDA

- ENABLE_MPI

Enable MPI support. This will build the parallel nvector and the MPI-aware version of the ManyVector library.

Default:

OFF

Note

Setting this option to ON will trigger several additional options related to MPI.

- MPI_C_COMPILER

mpicc program

Default:

- MPI_CXX_COMPILER

mpicxx program

Default:

Note

This option is triggered only if MPI is enabled (ENABLE_MPI is ON) and C++ examples are enabled (EXAMPLES_ENABLE_CXX is ON). All SUNDIALS solvers can be used from C++ MPI applications by default without setting any additional configuration options other than ENABLE_MPI.

- MPI_Fortran_COMPILER

mpif90 program

Default:

Note

This option is triggered only if MPI is enabled (ENABLE_MPI is ON) and Fortran-C support is enabled (EXAMPLES_ENABLE_F2003 is ON).

- MPIEXEC_EXECUTABLE

Specify the executable for running MPI programs

Default:

mpirun

Note

This option is triggered only if MPI is enabled (ENABLE_MPI is ON).

- ENABLE_ONEMKL

Enable oneMKL support.

Default:

OFF

- ONEMKL_DIR

Path to oneMKL installation.

Default: none

- SUNDIALS_ONEMKL_USE_GETRF_LOOP

This advanced debugging option replaces the batched LU factorization with a loop over each system in the batch and a non-batched LU factorization.

Default: OFF

- SUNDIALS_ONEMKL_USE_GETRS_LOOP

This advanced debugging option replaces the batched LU solve with a loop over each system in the batch and a non-batched solve.

Default: OFF

- ENABLE_OPENMP

Enable OpenMP support (build the OpenMP NVector)

Default:

OFF

- ENABLE_PETSC

Enable PETSc support

Default:

OFF

Note

See additional information on building with PETSc enabled in §1.2.4.

- PETSC_DIR

Path to PETSc installation

Default: none

- PETSC_LIBRARIES

Semi-colon separated list of PETSc link libraries. Unless provided by the user, this is autopopulated based on the PETSc installation found in PETSC_DIR.

Default: none

- PETSC_INCLUDES

Semi-colon separated list of PETSc include directories. Unless provided by the user, this is autopopulated based on the PETSc installation found in PETSC_DIR.

Default: none

- ENABLE_PTHREAD

Enable Pthreads support (build the Pthreads NVector)

Default:

OFF

- ENABLE_RAJA

Enable RAJA support.

Default: OFF

Note

You need to enable CUDA or HIP in order to build the RAJA vector module.

- SUNDIALS_RAJA_BACKENDS

If building SUNDIALS with RAJA support, this sets the RAJA backend to target. Values supported are CUDA, HIP, or SYCL.

Default: CUDA

- ENABLE_SUPERLUDIST

Enable SuperLU_DIST support

Default:

OFF

Note

See additional information on building with SuperLU_DIST enabled in §1.2.4.

- SUPERLUDIST_DIR

Path to SuperLU_DIST installation.

Default: none

- SUPERLUDIST_OpenMP

Enable SUNDIALS support for SuperLU_DIST built with OpenMP

Default: none

Note: SuperLU_DIST must be built with OpenMP support for this option to function. Additionally the environment variable OMP_NUM_THREADS must be set to the desired number of threads.

- SUPERLUDIST_INCLUDE_DIRS

List of include paths for SuperLU_DIST (under a typical SuperLU_DIST install, this is typically the SuperLU_DIST SRC directory)

Default: none

Note

This is an advanced option. Prefer to use SUPERLUDIST_DIR.

- SUPERLUDIST_LIBRARIES

Semi-colon separated list of libraries needed for SuperLU_DIST

Default: none

Note

This is an advanced option. Prefer to use SUPERLUDIST_DIR.

- SUPERLUDIST_INCLUDE_DIR

Path to SuperLU_DIST header files (under a typical SuperLU_DIST install, this is typically the SuperLU_DIST SRC directory)

Default: none

Note

This is an advanced option. This option is deprecated. Use SUPERLUDIST_INCLUDE_DIRS.

- SUPERLUDIST_LIBRARY_DIR

Path to SuperLU_DIST installed library files

Default: none

Note

This option is deprecated. Use SUPERLUDIST_DIR.

- ENABLE_SUPERLUMT

Enable SuperLU_MT support

Default:

OFF

Note

See additional information on building with SuperLU_MT enabled in §1.2.4.

- SUPERLUMT_INCLUDE_DIR

Path to SuperLU_MT header files (under a typical SuperLU_MT install, this is typically the SuperLU_MT SRC directory)

Default: none

- SUPERLUMT_LIBRARY_DIR

Path to SuperLU_MT installed library files

Default: none

- SUPERLUMT_THREAD_TYPE

Must be set to Pthread or OpenMP, depending on how SuperLU_MT was compiled.

Default: Pthread

- ENABLE_SYCL

Enable SYCL support.

Default: OFF

Note

Building with SYCL enabled requires a compiler that supports a subset of the SYCL 2020 specification (specifically sycl/sycl.hpp must be available).

CMake does not currently support autodetection of SYCL compilers and CMAKE_CXX_COMPILER must be set to a valid SYCL compiler. At present the only supported SYCL compilers are the Intel oneAPI compilers, i.e., dpcpp and icpx. When using icpx the -fsycl flag and any ahead of time compilation flags must be added to CMAKE_CXX_FLAGS.

- SUNDIALS_SYCL_2020_UNSUPPORTED

This advanced option disables the use of some features from the SYCL 2020 standard in SUNDIALS libraries and examples. This can be used to work around some cases of incomplete compiler support for SYCL 2020.

Default: OFF

- ENABLE_CALIPER

Enable CALIPER support

Default: OFF

Note

Using Caliper requires setting SUNDIALS_BUILD_WITH_PROFILING to ON.

- CALIPER_DIR

Path to the root of a Caliper installation

Default: None

- ENABLE_ADIAK

Enable Adiak support

Default: OFF

- adiak_DIR

Path to the root of an Adiak installation

Default: None

- SUNDIALS_LAPACK_CASE

Specify the case to use in the Fortran name-mangling scheme, options are:

lower or upper

Default:

Note

The build system will attempt to infer the Fortran name-mangling scheme using the Fortran compiler. This option should only be used if a Fortran compiler is not available or to override the inferred or default (lower) scheme if one can not be determined. If used, SUNDIALS_LAPACK_UNDERSCORES must also be set.

- SUNDIALS_LAPACK_UNDERSCORES

Specify the number of underscores to append in the Fortran name-mangling scheme, options are:

none, one, or two

Default:

Note

The build system will attempt to infer the Fortran name-mangling scheme using the Fortran compiler. This option should only be used if a Fortran compiler is not available or to override the inferred or default (one) scheme if one can not be determined. If used, SUNDIALS_LAPACK_CASE must also be set.

- SUNDIALS_INDEX_TYPE

Integer type used for SUNDIALS indices. The size must match the size provided for the SUNDIALS_INDEX_SIZE option.

Default: Automatically determined based on SUNDIALS_INDEX_SIZE

Note

In past SUNDIALS versions, a user could set this option to INT64_T to use 64-bit integers, or INT32_T to use 32-bit integers. Starting in SUNDIALS 3.2.0, these special values are deprecated. For SUNDIALS 3.2.0 and up, a user will only need to use the SUNDIALS_INDEX_SIZE option in most cases.

- SUNDIALS_INDEX_SIZE

Integer size (in bits) used for indices in SUNDIALS, options are:

32 or 64

Default:

64

Note

The build system tries to find an integer type of appropriate size. Candidate 64-bit integer types are (in order of preference): int64_t, __int64, long long, and long. Candidate 32-bit integers are (in order of preference): int32_t, int, and long. The advanced option, SUNDIALS_INDEX_TYPE, can be used to provide a type not listed here.

- SUNDIALS_PRECISION

The floating-point precision used in SUNDIALS packages and class implementations, options are:

double, single, or extended

Default:

double

- SUNDIALS_MATH_LIBRARY

The standard C math library (e.g., libm) to link with.

Default:

-lm on Unix systems, none otherwise

- SUNDIALS_INSTALL_CMAKEDIR

Installation directory for the SUNDIALS cmake files (relative to CMAKE_INSTALL_PREFIX).

Default:

CMAKE_INSTALL_PREFIX/cmake/sundials

- ENABLE_XBRAID

Enable or disable the ARKStep + XBraid interface.

Default:

OFF

Note

See additional information on building with XBraid enabled in §1.2.4.

- XBRAID_DIR

The root directory of the XBraid installation.

Default:

OFF

- XBRAID_INCLUDES

Semi-colon separated list of XBraid include directories. Unless provided by the user, this is autopopulated based on the XBraid installation found in XBRAID_DIR.

Default: none

- XBRAID_LIBRARIES

Semi-colon separated list of XBraid link libraries. Unless provided by the user, this is autopopulated based on the XBraid installation found in XBRAID_DIR.

Default: none

- USE_XSDK_DEFAULTS

Enable xSDK (see https://xsdk.info for more information) default configuration settings. This sets CMAKE_BUILD_TYPE to Debug, SUNDIALS_INDEX_SIZE to 32 and SUNDIALS_PRECISION to double.

Default:

OFF

1.2.3. Configuration examples

The following examples will help demonstrate usage of the CMake configure options.

To configure SUNDIALS using the default C and Fortran compilers,

and default mpicc and mpif90 parallel compilers,

enable compilation of examples, and install libraries, headers, and

example sources under subdirectories of /home/myname/sundials/, use:

% cmake \

> -DCMAKE_INSTALL_PREFIX=/home/myname/sundials/instdir \

> -DEXAMPLES_INSTALL_PATH=/home/myname/sundials/instdir/examples \

> -DENABLE_MPI=ON \

> /home/myname/sundials/srcdir

% make install

To disable installation of the examples, use:

% cmake \

> -DCMAKE_INSTALL_PREFIX=/home/myname/sundials/instdir \

> -DEXAMPLES_INSTALL_PATH=/home/myname/sundials/instdir/examples \

> -DENABLE_MPI=ON \

> -DEXAMPLES_INSTALL=OFF \

> /home/myname/sundials/srcdir

% make install
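Several of the options from §1.2.2 can be combined on one configure line. As a sketch, the following builds only CVODE and ARKODE in Release mode with 32-bit indices. The paths reuse the hypothetical /home/myname/sundials layout from the examples above, and the command is assembled and printed rather than executed so that it can be inspected first.

```shell
# Hypothetical locations, matching the examples above.
SRCDIR=/home/myname/sundials/srcdir
INSTDIR=/home/myname/sundials/instdir

# Collect the configure arguments as positional parameters.
set -- \
  -DCMAKE_INSTALL_PREFIX="$INSTDIR" \
  -DCMAKE_BUILD_TYPE=Release \
  -DBUILD_CVODES=OFF \
  -DBUILD_IDA=OFF \
  -DBUILD_IDAS=OFF \
  -DBUILD_KINSOL=OFF \
  -DSUNDIALS_INDEX_SIZE=32 \
  -DSUNDIALS_PRECISION=double \
  "$SRCDIR"

# Dry run: print the command that would be executed.
echo cmake "$@"
```

To configure for real, run cmake "$@" from the build directory in place of the final echo, then make and make install as before.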

1.2.4. Working with external Libraries

The SUNDIALS suite contains many options to enable implementation flexibility when developing solutions. The following are some notes addressing specific configurations when using the supported third party libraries.

1.2.4.1. Building with Ginkgo

Ginkgo is a high-performance linear algebra library for

manycore systems, with a focus on solving sparse linear systems. It is implemented using modern

C++ (you will need at least a C++14 compliant compiler to build it), with GPU kernels implemented in

CUDA (for NVIDIA devices), HIP (for AMD devices) and SYCL/DPC++ (for Intel devices and other

supported hardware). To enable Ginkgo in SUNDIALS, set ENABLE_GINKGO to ON

and provide the path to the root of the Ginkgo installation in Ginkgo_DIR.

Additionally, SUNDIALS_GINKGO_BACKENDS must be set to a list of Ginkgo target

architectures/executors. E.g.,

% cmake \

> -DENABLE_GINKGO=ON \

> -DGinkgo_DIR=/path/to/ginkgo/installation \

> -DSUNDIALS_GINKGO_BACKENDS="REF;OMP;CUDA" \

> /home/myname/sundials/srcdir

The SUNDIALS interfaces to Ginkgo are not compatible with SUNDIALS_PRECISION set

to extended.

1.2.4.2. Building with Kokkos

Kokkos is a modern C++ (requires

at least C++14) programming model for writing performance portable code for

multicore CPU and GPU-based systems including NVIDIA, AMD, and Intel

accelerators. To enable Kokkos in SUNDIALS, set ENABLE_KOKKOS to

ON and provide the path to the root of the Kokkos installation in

Kokkos_DIR. Additionally, the

Kokkos-Kernels library provides

common computational kernels for linear algebra. To enable Kokkos-Kernels in

SUNDIALS, set ENABLE_KOKKOS_KERNELS to ON and provide the

path to the root of the Kokkos-Kernels installation in

KokkosKernels_DIR, e.g.,

% cmake \

> -DENABLE_KOKKOS=ON \

> -DKokkos_DIR=/path/to/kokkos/installation \

> -DENABLE_KOKKOS_KERNELS=ON \

> -DKokkosKernels_DIR=/path/to/kokkoskernels/installation \

> /home/myname/sundials/srcdir

Note

The minimum supported version of Kokkos-Kernels is 3.7.00.

1.2.4.3. Building with LAPACK

To enable LAPACK, set the ENABLE_LAPACK option to ON.

If the directory containing the LAPACK library is in the

LD_LIBRARY_PATH environment variable, CMake will set the

LAPACK_LIBRARIES variable accordingly, otherwise CMake will

attempt to find the LAPACK library in standard system locations. To

explicitly tell CMake what library to use, the LAPACK_LIBRARIES

variable can be set to the desired libraries required for LAPACK.

% cmake \

> -DCMAKE_INSTALL_PREFIX=/home/myname/sundials/instdir \

> -DEXAMPLES_INSTALL_PATH=/home/myname/sundials/instdir/examples \

> -DENABLE_LAPACK=ON \

> -DLAPACK_LIBRARIES="/mylapackpath/lib/libblas.so;/mylapackpath/lib/liblapack.so" \

> /home/myname/sundials/srcdir

% make install

Note

If a working Fortran compiler is not available to infer the Fortran

name-mangling scheme, the options SUNDIALS_LAPACK_CASE and

SUNDIALS_LAPACK_UNDERSCORES must be set in order to bypass the check

for a Fortran compiler and define the name-mangling scheme. The defaults for

these options in earlier versions of SUNDIALS were lower and one,

respectively.

SUNDIALS has been tested with OpenBLAS 0.3.18.
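When no Fortran compiler is available, the name-mangling scheme has to be supplied by hand. The sketch below uses the option names listed in §1.2.2 (SUNDIALS_LAPACK_CASE and SUNDIALS_LAPACK_UNDERSCORES) with the lower/one scheme mentioned above. The library paths are the hypothetical ones from the preceding example, and the scheme is an assumption that must match how your LAPACK library was actually compiled. The semicolon-separated list is quoted so that the shell does not treat the semicolon as a command separator.

```shell
SRCDIR=/home/myname/sundials/srcdir   # hypothetical source directory
# Hypothetical library locations; quoted because of the semicolon.
LAPACK_LIBS="/mylapackpath/lib/libblas.so;/mylapackpath/lib/liblapack.so"

# Collect the configure arguments as positional parameters.
set -- \
  -DENABLE_LAPACK=ON \
  -DLAPACK_LIBRARIES="$LAPACK_LIBS" \
  -DSUNDIALS_LAPACK_CASE=lower \
  -DSUNDIALS_LAPACK_UNDERSCORES=one \
  "$SRCDIR"

# Dry run: print the command; use cmake "$@" to configure.
echo cmake "$@"
```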

1.2.4.4. Building with KLU

KLU is a software package for the direct solution of sparse nonsymmetric linear systems of equations that arise in circuit simulation and is part of SuiteSparse, a suite of sparse matrix software. The library is developed by Texas A&M University and is available from the SuiteSparse GitHub repository.

To enable KLU, set ENABLE_KLU to ON, set KLU_INCLUDE_DIR to the

include path of the KLU installation and set KLU_LIBRARY_DIR

to the lib path of the KLU installation. In that case, the CMake configure

will result in populating the following variables: AMD_LIBRARY,

AMD_LIBRARY_DIR, BTF_LIBRARY, BTF_LIBRARY_DIR,

COLAMD_LIBRARY, COLAMD_LIBRARY_DIR, and KLU_LIBRARY.

For SuiteSparse 7.4.0 and newer, the necessary information can also be gathered

from a CMake import target. If SuiteSparse is installed in a non-default

prefix, the path to the CMake Config file can be set using

CMAKE_PREFIX_PATH. In that case, the CMake configure step won’t populate

the previously mentioned variables. It is still possible to set

KLU_INCLUDE_DIR and KLU_LIBRARY_DIR which take precedence over a

potentially installed CMake import target file.

In either case, a CMake target SUNDIALS::KLU will be created if the KLU

library could be found. Dependent targets should link to that target.

SUNDIALS has been tested with SuiteSparse version 5.10.1.
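A configure line for the first approach (explicit include and library directories) might look like the following sketch. The SuiteSparse prefix and the source directory are hypothetical, and the include/lib subdirectory names are an assumption about the install layout; some installations place the headers elsewhere, in which case KLU_INCLUDE_DIR should point there instead.

```shell
SRCDIR=/home/myname/sundials/srcdir   # hypothetical source directory
KLU_ROOT=/path/to/suitesparse         # hypothetical SuiteSparse install prefix

# Collect the configure arguments as positional parameters.
set -- \
  -DENABLE_KLU=ON \
  -DKLU_INCLUDE_DIR="$KLU_ROOT/include" \
  -DKLU_LIBRARY_DIR="$KLU_ROOT/lib" \
  "$SRCDIR"

# Dry run: print the command; use cmake "$@" to configure.
echo cmake "$@"
```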

1.2.4.5. Building with SuperLU_DIST

SuperLU_DIST is a general purpose library for the direct solution of large, sparse, nonsymmetric systems of linear equations in a distributed memory setting. The library is developed by Lawrence Berkeley National Laboratory and is available from the SuperLU_DIST GitHub repository.

To enable SuperLU_DIST, set ENABLE_SUPERLUDIST to ON and set

SUPERLUDIST_DIR to the path where SuperLU_DIST is installed.

If SuperLU_DIST was built with OpenMP, then the options SUPERLUDIST_OpenMP

and ENABLE_OPENMP should both be set to ON.

SUNDIALS supports SuperLU_DIST v7.0.0 – v8.x.x and has been tested with v7.2.0 and v8.1.0.

1.2.4.6. Building with SuperLU_MT

SuperLU_MT is a general purpose library for the direct solution of large, sparse, nonsymmetric systems of linear equations on shared memory parallel machines. The library is developed by Lawrence Berkeley National Laboratory and is available from the SuperLU_MT GitHub repository.

To enable SuperLU_MT, set ENABLE_SUPERLUMT to ON, set

SUPERLUMT_INCLUDE_DIR to the SRC path of the SuperLU_MT

installation, and set the variable SUPERLUMT_LIBRARY_DIR to the

lib path of the SuperLU_MT installation. At the same time, the

variable SUPERLUMT_LIBRARIES must be set to a semicolon-separated

list of other libraries SuperLU_MT depends on. For example, if

SuperLU_MT was built with an external BLAS library, then include the

full path to the BLAS library in this list. Additionally, the

variable SUPERLUMT_THREAD_TYPE must be set to either Pthread

or OpenMP.

Do not mix thread types when building SUNDIALS solvers.

If threading is enabled for SUNDIALS by having either

ENABLE_OPENMP or ENABLE_PTHREAD set to ON, then SuperLU_MT

should be set to use the same threading type.
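The sketch below shows one way to keep the threading type consistent between SUNDIALS and SuperLU_MT when configuring. The paths, the SLUMT_ROOT and BLAS_LIB names, and the choice of OpenMP are assumptions made for illustration. The script prints the configure command rather than running it.

```shell
#!/bin/sh
# Placeholder locations (assumptions); replace with the paths on your system.
SOLVERDIR="${SOLVERDIR:-$HOME/sundials}"
BUILDDIR="${BUILDDIR:-$HOME/sundials-build}"
SLUMT_ROOT="${SLUMT_ROOT:-/opt/superlu_mt}"
BLAS_LIB="${BLAS_LIB:-/usr/lib/libblas.so}"

# Pick one threading model and use it for both SUNDIALS and SuperLU_MT.
THREAD_TYPE="OpenMP"   # or "Pthread"
if [ "$THREAD_TYPE" = "OpenMP" ]; then
  sundials_threads="-DENABLE_OPENMP=ON"
else
  sundials_threads="-DENABLE_PTHREAD=ON"
fi

# Quote each argument so that a semicolon-separated library list
# (here a single BLAS path) reaches CMake as one argument.
set -- -S "$SOLVERDIR" -B "$BUILDDIR" \
  -DENABLE_SUPERLUMT=ON \
  "-DSUPERLUMT_INCLUDE_DIR=$SLUMT_ROOT/SRC" \
  "-DSUPERLUMT_LIBRARY_DIR=$SLUMT_ROOT/lib" \
  "-DSUPERLUMT_LIBRARIES=$BLAS_LIB" \
  "-DSUPERLUMT_THREAD_TYPE=$THREAD_TYPE" \
  "$sundials_threads"

# Print the command for review; run `cmake "$@"` instead to configure.
echo cmake "$@"
```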

SUNDIALS has been tested with SuperLU_MT version 3.1.

1.2.4.7. Building with PETSc

The Portable, Extensible Toolkit for Scientific Computation (PETSc) is a suite of data structures and routines for simulating applications modeled by partial differential equations. The library is developed by Argonne National Laboratory and is available from the PETSc GitLab repository.

To enable PETSc, set ENABLE_PETSC to ON, and set PETSC_DIR to the

path of the PETSc installation. Alternatively, a user can provide a list of

include paths in PETSC_INCLUDES and a list of complete paths to the PETSc

libraries in PETSC_LIBRARIES.

SUNDIALS is regularly tested with the latest PETSc versions, specifically up to version 3.18.1 as of SUNDIALS version v7.0.0. SUNDIALS requires PETSc 3.5.0 or newer.

1.2.4.8. Building with hypre

hypre is a library of high performance preconditioners and solvers featuring multigrid methods for the solution of large, sparse linear systems of equations on massively parallel computers. The library is developed by Lawrence Livermore National Laboratory and is available from the hypre GitHub repository.

To enable hypre, set ENABLE_HYPRE to ON, set HYPRE_INCLUDE_DIR

to the include path of the hypre installation, and set the variable

HYPRE_LIBRARY_DIR to the lib path of the hypre installation.

Note

SUNDIALS must be configured so that SUNDIALS_INDEX_SIZE is compatible

with HYPRE_BigInt in the hypre installation.

SUNDIALS is regularly tested with the latest versions of hypre, specifically up to version 2.26.0 as of SUNDIALS version v7.0.0.

1.2.4.9. Building with MAGMA

The Matrix Algebra on GPU and Multicore Architectures (MAGMA) project provides a dense linear algebra library similar to LAPACK but targeting heterogeneous architectures. The library is developed by the University of Tennessee and is available from the UTK webpage.

To enable the SUNDIALS MAGMA interface, set ENABLE_MAGMA to ON, set

MAGMA_DIR to the MAGMA installation path, and set SUNDIALS_MAGMA_BACKENDS to

the desired MAGMA backend to use with SUNDIALS, e.g., CUDA or HIP.

SUNDIALS has been tested with MAGMA version v2.6.1 and v2.6.2.

1.2.4.10. Building with oneMKL

The Intel oneAPI Math Kernel Library (oneMKL) includes CPU and DPC++ interfaces for LAPACK dense linear algebra routines. The SUNDIALS oneMKL interface targets the DPC++ routines; to use the CPU routines, see §1.2.4.3.

To enable the SUNDIALS oneMKL interface, set ENABLE_ONEMKL to ON and set

ONEMKL_DIR to the oneMKL installation path.

SUNDIALS has been tested with oneMKL version 2021.4.

1.2.4.11. Building with CUDA

The NVIDIA CUDA Toolkit provides a development environment for GPU-accelerated computing with NVIDIA GPUs. The CUDA Toolkit and compatible NVIDIA drivers are available from the NVIDIA developer website.

To enable CUDA, set ENABLE_CUDA to ON. If CUDA is installed in a

nonstandard location, you may be prompted to set the variable

CUDA_TOOLKIT_ROOT_DIR with your CUDA Toolkit installation path. To enable

CUDA examples, set EXAMPLES_ENABLE_CUDA to ON.

SUNDIALS has been tested with the CUDA toolkit versions 10 and 11.

1.2.4.12. Building with HIP

HIP (heterogeneous-compute interface for portability) allows developers to create portable applications for AMD and NVIDIA GPUs. HIP can be obtained from the HIP GitHub repository.

To enable HIP, set ENABLE_HIP to ON and set AMDGPU_TARGETS to the desired target (e.g., gfx705).

In addition, set CMAKE_C_COMPILER and CMAKE_CXX_COMPILER to point to an installation of hipcc.

SUNDIALS has been tested with HIP versions from 5.0.0 to 5.4.3.

1.2.4.13. Building with RAJA

RAJA is a performance portability layer developed by Lawrence Livermore National Laboratory and can be obtained from the RAJA GitHub repository.

Building SUNDIALS RAJA modules requires a CUDA, HIP, or SYCL

enabled RAJA installation. To enable RAJA, set ENABLE_RAJA to ON, set

SUNDIALS_RAJA_BACKENDS to the desired backend (CUDA, HIP, or

SYCL), and set ENABLE_CUDA, ENABLE_HIP, or ENABLE_SYCL to

ON depending on the selected backend. If RAJA is installed in a nonstandard

location, you will be prompted to set the variable RAJA_DIR with

the path to the RAJA CMake configuration file. To enable building the

RAJA examples, set EXAMPLES_ENABLE_CXX to ON.
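A minimal sketch of pairing the RAJA backend with the matching SUNDIALS option is shown below. The backend choice (CUDA) and all paths are assumptions, and RAJA_CONFIG_DIR is a name made up for this example; the actual location of the RAJA CMake configuration file depends on how RAJA was installed. The script prints the configure command rather than running it.

```shell
#!/bin/sh
# Placeholder locations (assumptions); replace with the paths on your system.
SOLVERDIR="${SOLVERDIR:-$HOME/sundials}"
BUILDDIR="${BUILDDIR:-$HOME/sundials-build}"
RAJA_CONFIG_DIR="${RAJA_CONFIG_DIR:-/opt/raja/lib/cmake/raja}"

# The backend RAJA was built with; the matching ENABLE_* option must be ON.
RAJA_BACKEND="CUDA"
case "$RAJA_BACKEND" in
  CUDA) backend_flag="-DENABLE_CUDA=ON" ;;
  HIP)  backend_flag="-DENABLE_HIP=ON" ;;
  SYCL) backend_flag="-DENABLE_SYCL=ON" ;;
  *)    echo "unsupported RAJA backend: $RAJA_BACKEND" >&2; exit 1 ;;
esac

set -- -S "$SOLVERDIR" -B "$BUILDDIR" \
  -DENABLE_RAJA=ON \
  "-DSUNDIALS_RAJA_BACKENDS=$RAJA_BACKEND" \
  "$backend_flag" \
  "-DRAJA_DIR=$RAJA_CONFIG_DIR" \
  -DEXAMPLES_ENABLE_CXX=ON

# Print the command for review; run `cmake "$@"` instead to configure.
echo cmake "$@"
```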

SUNDIALS has been tested with RAJA version 0.14.0.

1.2.4.14. Building with XBraid

XBraid is a parallel-in-time library implementing an optimal-scaling multigrid reduction in time (MGRIT) solver. The library is developed by Lawrence Livermore National Laboratory and is available from the XBraid GitHub repository.

To enable XBraid support, set ENABLE_XBRAID to ON, set XBRAID_DIR to

the root install location of XBraid or the location of the clone of the XBraid

repository.

Note

At this time the XBraid types braid_Int and braid_Real are hard-coded

to int and double respectively. As such SUNDIALS must be configured

with SUNDIALS_INDEX_SIZE set to 32 and SUNDIALS_PRECISION set to

double. Additionally, SUNDIALS must be configured with ENABLE_MPI set

to ON.
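Putting the constraints from the note together, a configure command for XBraid might look like the sketch below. The paths and the XBRAID_ROOT name are placeholders chosen for this example. The script prints the command rather than running it.

```shell
#!/bin/sh
# Placeholder locations (assumptions); replace with the paths on your system.
SOLVERDIR="${SOLVERDIR:-$HOME/sundials}"
BUILDDIR="${BUILDDIR:-$HOME/sundials-build}"
XBRAID_ROOT="${XBRAID_ROOT:-$HOME/xbraid}"

# XBraid requires 32-bit indices, double precision, and MPI in SUNDIALS.
set -- -S "$SOLVERDIR" -B "$BUILDDIR" \
  -DENABLE_XBRAID=ON \
  "-DXBRAID_DIR=$XBRAID_ROOT" \
  -DSUNDIALS_INDEX_SIZE=32 \
  -DSUNDIALS_PRECISION=double \
  -DENABLE_MPI=ON

# Print the command for review; run `cmake "$@"` instead to configure.
echo cmake "$@"
```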

SUNDIALS has been tested with XBraid version 3.0.0.

1.2.5. Testing the build and installation

If SUNDIALS was configured with any of the EXAMPLES_ENABLE_<language> options

set to ON, then a set of regression tests can be run after building

with the make command by running:

% make test

Additionally, if EXAMPLES_INSTALL was also set to ON, then a

set of smoke tests can be run after installing with the make install

command by running:

% make test_install

1.2.6. Building and Running Examples

Each of the SUNDIALS solvers is distributed with a set of examples demonstrating

basic usage. To build and install the examples, set at least one of the

EXAMPLES_ENABLE_<language> options to ON, and set EXAMPLES_INSTALL

to ON. Specify the installation path for the examples with the variable

EXAMPLES_INSTALL_PATH. CMake will generate CMakeLists.txt configuration

files (and Makefile files if on Linux/Unix) that reference the installed

SUNDIALS headers and libraries.

Either the CMakeLists.txt file or the traditional Makefile may be used

to build the examples as well as serve as a template for creating user developed

solutions. To use the supplied Makefile simply run make to compile and

generate the executables. To use CMake from within the installed example

directory, run cmake (or ccmake or cmake-gui to use the GUI)

followed by make to compile the example code. Note that if CMake is used,

it will overwrite the traditional Makefile with a new CMake-generated

Makefile.
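The workflow above can be sketched as a short script. It assumes the C examples are enabled through EXAMPLES_ENABLE_C and that the installed examples are grouped by solver and vector type (the cvode/serial subdirectory is an assumption, so check your install path). The run helper is hypothetical and only prints each command.

```shell
#!/bin/sh
# Placeholder locations (assumptions); replace with the paths on your system.
SOLVERDIR="${SOLVERDIR:-$HOME/sundials}"
BUILDDIR="${BUILDDIR:-$HOME/sundials-build}"
INSTDIR="${INSTDIR:-$HOME/sundials-install}"
EXAMPLES_DIR="$INSTDIR/examples"

# Dry-run helper (hypothetical): prints each command.
# Change its body to "$@" to execute the commands instead.
run() { echo "$@"; }

# Configure with the C examples enabled and installed.
run cmake -S "$SOLVERDIR" -B "$BUILDDIR" \
  "-DCMAKE_INSTALL_PREFIX=$INSTDIR" \
  -DEXAMPLES_ENABLE_C=ON \
  -DEXAMPLES_INSTALL=ON \
  "-DEXAMPLES_INSTALL_PATH=$EXAMPLES_DIR"

# Build and install SUNDIALS together with the examples.
run cmake --build "$BUILDDIR" --target install

# Compile one set of installed examples with the supplied Makefile.
run make -C "$EXAMPLES_DIR/cvode/serial"
```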

The resulting output from running the examples can be compared with example output bundled in the SUNDIALS distribution.

Note

There will potentially be differences in the output due to machine architecture, compiler versions, use of third-party libraries, etc.

1.2.7. Configuring, building, and installing on Windows

CMake can also be used to build SUNDIALS on Windows. To build SUNDIALS for use with Visual Studio the following steps should be performed:

1. Unzip the downloaded tar file(s) into a directory. This will be the SOLVERDIR.

2. Create a separate BUILDDIR.

3. Open a Visual Studio Command Prompt and cd to BUILDDIR.

4. Run cmake-gui ../SOLVERDIR

   a. Hit Configure

   b. Check/Uncheck solvers to be built

   c. Change CMAKE_INSTALL_PREFIX to INSTDIR

   d. Set other options as desired

   e. Hit Generate

5. Back in the VS Command Window:

   a. Run msbuild ALL_BUILD.vcxproj

   b. Run msbuild INSTALL.vcxproj

The resulting libraries will be in the INSTDIR.

The SUNDIALS project can also now be opened in Visual Studio. Double click on

the ALL_BUILD.vcxproj file to open the project. Build the whole solution

to create the SUNDIALS libraries. To use the SUNDIALS libraries in your own

projects, you must set the include directories for your project, add the

SUNDIALS libraries to your project solution, and set the SUNDIALS libraries as

dependencies for your project.

1.2.8. Installed libraries and exported header files

Using the CMake SUNDIALS build system, the command

$ make install

will install the libraries under LIBDIR and the public header files under

INCLUDEDIR. The values for these directories are INSTDIR/lib and

INSTDIR/include, respectively. The location can be changed by setting the

CMake variable CMAKE_INSTALL_PREFIX. Although all installed libraries

reside directly under LIBDIR, the public header files are further organized into

subdirectories under INCLUDEDIR.

The installed libraries and exported header files are listed for reference in

the table below. The file extension .LIB is typically

.so for shared libraries and .a for static libraries. Note that, in this

table, names are relative to LIBDIR for libraries and to INCLUDEDIR for

header files.

Warning

SUNDIALS installs some header files to INSTDIR/include/sundials/priv.

All of the header files in this directory are private and should not

be included in user code. The private headers are subject to change

without any notice and relying on them may break your code.

1.2.9. Using SUNDIALS in your project

After building and installing SUNDIALS, using SUNDIALS in your application involves two steps: including the right header files and linking to the right libraries.

Depending on which features of SUNDIALS your application uses, the header files needed will vary. For example, if you want to use CVODE for serial computations you need the following includes:

#include <cvode/cvode.h>

#include <nvector/nvector_serial.h>

If you wanted to use CVODE with the GMRES linear solver and our CUDA-enabled vector, you would need:

#include <cvode/cvode.h>

#include <nvector/nvector_cuda.h>

#include <sunlinsol/sunlinsol_spgmr.h>

The story is similar for linking to SUNDIALS. Starting in v7.0.0, all

applications will need to link to libsundials_core. Furthermore, depending

on the packages and modules of SUNDIALS of interest an application will need to

link to a few more libraries. Using the same examples as for the includes, we

would need to also link to libsundials_cvode, libsundials_nvecserial for

the first example and libsundials_cvode, libsundials_nveccuda,

libsundials_sunlinsolspgmr for the second.
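For the first example (CVODE with the serial vector), a compile-and-link command might look like the sketch below. The source file main.c, the output name myexec, the compiler name cc, and the INSTDIR default are assumptions. The library directory may be lib64 on some systems, and -lm is included because the math library is commonly needed on Linux. The script prints the command rather than running it.

```shell
#!/bin/sh
# Placeholder install location (assumption); replace with your INSTDIR.
INSTDIR="${INSTDIR:-$HOME/sundials-install}"

# Package and vector libraries first, then libsundials_core,
# which every application must link to starting in v7.0.0.
LIBS="-lsundials_cvode -lsundials_nvecserial -lsundials_core -lm"

# main.c is a hypothetical user program that includes
# <cvode/cvode.h> and <nvector/nvector_serial.h>.
link_cmd="cc main.c -I$INSTDIR/include -L$INSTDIR/lib $LIBS -o myexec"

# Print the command for review; paste it into the shell to run it.
echo "$link_cmd"
```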

Refer to the documentation sections for the individual packages and modules of SUNDIALS that interest you for the proper includes and libraries to link to.

1.2.10. Using SUNDIALS as a Third Party Library in other CMake Projects

The make install command will also install a CMake package configuration file

that other CMake projects can load to get all the information needed to build

against SUNDIALS. In the consuming project’s CMake code, the find_package

command may be used to search for the configuration file, which will be

installed to INSTDIR/SUNDIALS_INSTALL_CMAKEDIR/SUNDIALSConfig.cmake

alongside a package version file

INSTDIR/SUNDIALS_INSTALL_CMAKEDIR/SUNDIALSConfigVersion.cmake. Together

these files contain all the information the consuming project needs to use

SUNDIALS, including exported CMake targets. The SUNDIALS exported CMake targets

follow the same naming convention as the generated library binaries, e.g. the

exported target for CVODE is SUNDIALS::cvode. The CMake code snippet

below shows how a consuming project might leverage the SUNDIALS package

configuration file to build against SUNDIALS in their own CMake project.

# Version ranges in find_package require CMake 3.19 or newer.

cmake_minimum_required(VERSION 3.19)

project(MyProject)

# Set the variable SUNDIALS_DIR to the SUNDIALS instdir.

# When using the cmake CLI command, this can be done like so:

# cmake -D SUNDIALS_DIR=/path/to/sundials/installation

# Find any SUNDIALS version...

find_package(SUNDIALS REQUIRED)

# ... or find any version newer than some minimum...

find_package(SUNDIALS 7.1.0 REQUIRED)

# ... or find a version in a range

find_package(SUNDIALS 7.0.0...7.1.0 REQUIRED)

add_executable(myexec main.c)

# Link to SUNDIALS libraries through the exported targets.

# This is just an example, users should link to the targets appropriate

# for their use case.

target_link_libraries(myexec PUBLIC SUNDIALS::cvode SUNDIALS::nvecpetsc)

Note

Changed in version x.y.z: A single version provided to find_package denotes the minimum version

of SUNDIALS to look for, and any version equal to or newer than what is

specified will match. In prior versions SUNDIALSConfig.cmake required

the version found to have the same major version number as the single

version provided to find_package.

1.2.11. Table of SUNDIALS libraries and header files

Group | Module | Entries listed
Core | | Libraries, Headers
NVECTOR Modules | SERIAL | Libraries, Headers
NVECTOR Modules | PARALLEL | Libraries, Headers
NVECTOR Modules | OPENMP | Libraries, Headers
NVECTOR Modules | PTHREADS | Libraries, Headers
NVECTOR Modules | PARHYP | Libraries, Headers
NVECTOR Modules | PETSC | Libraries, Headers
NVECTOR Modules | CUDA | Libraries, Headers
NVECTOR Modules | HIP | Libraries, Headers
NVECTOR Modules | RAJA | Libraries, Headers
NVECTOR Modules | SYCL | Libraries, Headers
NVECTOR Modules | MANYVECTOR | Libraries, Headers
NVECTOR Modules | MPIMANYVECTOR | Libraries, Headers
NVECTOR Modules | MPIPLUSX | Libraries, Headers
SUNMATRIX Modules | BAND | Libraries, Headers
SUNMATRIX Modules | CUSPARSE | Libraries, Headers
SUNMATRIX Modules | DENSE | Libraries, Headers
SUNMATRIX Modules | Ginkgo | Headers
SUNMATRIX Modules | MAGMADENSE | Libraries, Headers
SUNMATRIX Modules | ONEMKLDENSE | Libraries, Headers
SUNMATRIX Modules | SPARSE | Libraries, Headers
SUNMATRIX Modules | SLUNRLOC | Libraries, Headers
SUNLINSOL Modules | BAND | Libraries, Headers
SUNLINSOL Modules | CUSOLVERSP_BATCHQR | Libraries, Headers
SUNLINSOL Modules | DENSE | Libraries, Headers
SUNLINSOL Modules | Ginkgo | Headers
SUNLINSOL Modules | KLU | Libraries, Headers
SUNLINSOL Modules | LAPACKBAND | Libraries, Headers
SUNLINSOL Modules | LAPACKDENSE | Libraries, Headers
SUNLINSOL Modules | MAGMADENSE | Libraries, Headers
SUNLINSOL Modules | ONEMKLDENSE | Libraries, Headers
SUNLINSOL Modules | PCG | Libraries, Headers
SUNLINSOL Modules | SPBCGS | Libraries, Headers
SUNLINSOL Modules | SPFGMR | Libraries, Headers
SUNLINSOL Modules | SPGMR | Libraries, Headers
SUNLINSOL Modules | SPTFQMR | Libraries, Headers
SUNLINSOL Modules | SUPERLUDIST | Libraries, Headers
SUNLINSOL Modules | SUPERLUMT | Libraries, Headers
SUNNONLINSOL Modules | NEWTON | Libraries, Headers
SUNNONLINSOL Modules | FIXEDPOINT | Libraries, Headers
SUNNONLINSOL Modules | PETSCSNES | Libraries, Headers
SUNMEMORY Modules | SYSTEM | Libraries, Headers
SUNMEMORY Modules | CUDA | Libraries, Headers
SUNMEMORY Modules | HIP | Libraries, Headers
SUNMEMORY Modules | SYCL | Libraries, Headers
SUNDIALS Packages | CVODE | Libraries, Headers
SUNDIALS Packages | CVODES | Libraries, Headers
SUNDIALS Packages | ARKODE | Libraries, Headers
SUNDIALS Packages | IDA | Libraries, Headers
SUNDIALS Packages | IDAS | Libraries, Headers
SUNDIALS Packages | KINSOL | Libraries, Headers

1.2.12. Installing SUNDIALS on HPC Clusters

This section is a guide for installing SUNDIALS on specific HPC clusters. In general, the procedure is the same as described previously for Linux machines. The main differences are in the modules and environment variables that are specific to different HPC clusters. We aim to keep this section as up to date as possible, but it may lag the latest software updates to each cluster.

1.2.12.1. Frontier

Frontier is an Exascale supercomputer at the Oak Ridge Leadership Computing Facility. If you are new to this system, then we recommend that you review the Frontier user guide.

A Standard Installation

Clone SUNDIALS:

git clone https://github.com/LLNL/sundials.git && cd sundials

Next we load the modules and set the environment variables needed to build SUNDIALS. This configuration enables both MPI and HIP support for distributed and GPU parallelism. It uses the HIP compiler for C and C++ and the Cray Fortran compiler. Other configurations are possible.

# required dependencies

module load PrgEnv-cray-amd/8.5.0

module load craype-accel-amd-gfx90a

module load rocm/5.3.0

module load cmake/3.23.2

# GPU-aware MPI

export MPICH_GPU_SUPPORT_ENABLED=1

# compiler environment hints

export CC=$(which hipcc)

export CXX=$(which hipcc)

export FC=$(which ftn)

export CFLAGS="-I${ROCM_PATH}/include"

export CXXFLAGS="-I${ROCM_PATH}/include -Wno-pass-failed"

export LDFLAGS="-L${ROCM_PATH}/lib -lamdhip64 ${PE_MPICH_GTL_DIR_amd_gfx90a} -lmpi_gtl_hsa"

Now we can build SUNDIALS. In general, this is the same procedure described in the previous sections. The following commands build and install SUNDIALS with MPI, HIP, and the Fortran interface enabled, where <install path> is your desired installation location, and <account> is your allocation account on Frontier:

cmake -S . -B builddir -DCMAKE_INSTALL_PREFIX=<install path> -DAMDGPU_TARGETS=gfx90a \

-DENABLE_HIP=ON -DENABLE_MPI=ON -DBUILD_FORTRAN_MODULE_INTERFACE=ON

cd builddir

make -j8 install

# Need an allocation to run the tests:

salloc -A <account> -t 10 -N 1 -p batch

make test

make test_install_all

1.2.13. Building with SUNDIALS Addons

SUNDIALS “addons” are community-developed code additions for SUNDIALS that can be subsumed by the SUNDIALS build system so that they have full access to all internal SUNDIALS symbols. The intent is for SUNDIALS addons to function as if they are part of the SUNDIALS library, while allowing them to have different licenses (although we still encourage BSD-3-Clause) and a different code style (although we encourage them to follow the SUNDIALS style).

Warning

SUNDIALS addons are not maintained by the SUNDIALS team and may come with different licenses. Use them at your own risk.

To build with SUNDIALS addons:

1. Clone/copy the addon(s) into <sundials root>/external/.

2. Copy the sundials-addon-example block in the <sundials root>/external/CMakeLists.txt, paste it below the example block, and modify the path listed for your own external addon(s).

3. When building SUNDIALS, set the CMake option SUNDIALS_ENABLE_EXTERNAL_ADDONS to ON.

4. Build SUNDIALS as usual.
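The addon steps can be sketched as shell commands. The addon URL and directory name are hypothetical, as are the SUNDIALS_ROOT and BUILDDIR defaults, and the run helper only prints each command. Editing external/CMakeLists.txt remains a manual step.

```shell
#!/bin/sh
# Placeholder locations (assumptions); replace with the paths on your system.
SUNDIALS_ROOT="${SUNDIALS_ROOT:-$HOME/sundials}"
BUILDDIR="${BUILDDIR:-$HOME/sundials-build}"

# Hypothetical addon repository and directory name.
ADDON_URL="https://example.com/my-sundials-addon.git"
ADDON_NAME="my-sundials-addon"

# Dry-run helper (hypothetical): prints each command.
# Change its body to "$@" to execute the commands instead.
run() { echo "$@"; }

# Place the addon under the external/ directory.
run git clone "$ADDON_URL" "$SUNDIALS_ROOT/external/$ADDON_NAME"

# Manual step: copy the sundials-addon-example block in
# $SUNDIALS_ROOT/external/CMakeLists.txt and point the copy at $ADDON_NAME.

# Configure with external addons enabled, then build as usual.
run cmake -S "$SUNDIALS_ROOT" -B "$BUILDDIR" -DSUNDIALS_ENABLE_EXTERNAL_ADDONS=ON
run cmake --build "$BUILDDIR"
```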